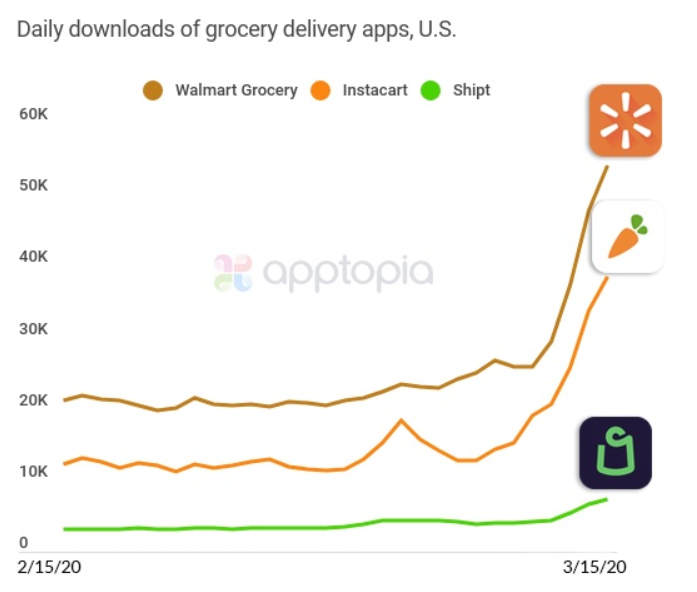

A common topic of conversation among SafetyTech adopters, including colleagues, clients, and partners, is how to predict the next accident, incident, or even near miss by reading early signals in time for the right controls to be put in place before something happens.

The ‘nirvana’ of Safety Data Analytics would look a little like what is portrayed in the movie ‘Minority Report’. It would analyze near-real-time process data, people data (fatigue, health, knowledge, engagement, etc.), and operational environment data. It would then compare the readings with the root cause analyses of previous events to identify similar patterns, so that alarms go off just in time for the “good guys” to step in and prevent an accident, exactly as the precogs alert Tom Cruise’s character to stop a crime just before the gun is fired.

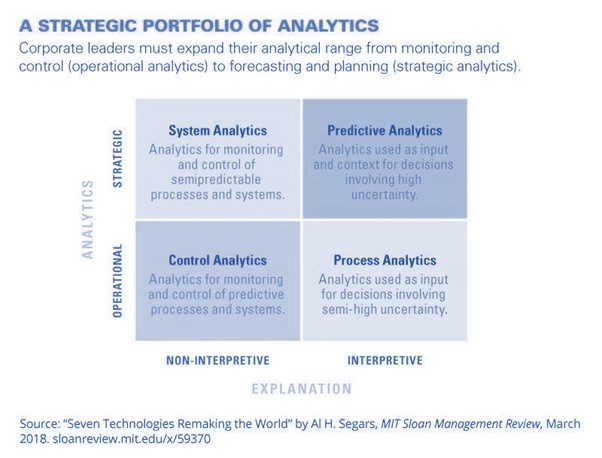

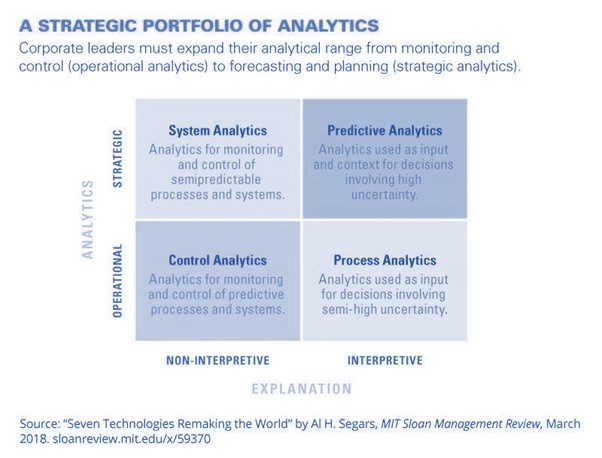

In his ‘Seven Technologies Remaking the World’, Albert H. Segars describes this as ‘strategic and interpretive’ analytics.

Having analyzed many 2x2 charts before, our trained eyes quickly look for the ‘double plus’ quadrant (in this case, Strategic x Interpretive) as the way to go. The author himself suggests that leaders must expand their analytical range in that direction, and it does make sense, right?

Does this mean a predictive model is always the best immediate answer?

Where should organizations start and expand from?

I’ve been in consulting for a good number of years now, and having worked with many organizations and cultures, I am tempted to answer this question with the traditional ‘It depends…’. Here is why.

1. How reliable is the existing historical safety data (asset integrity, accident/incident investigation reports, maintenance records, risk assessments, etc.)?

As mentioned earlier, predictive models work by matching new patterns against previously identified ones so that a suggested action can be taken.
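To make the pattern-matching idea concrete, here is a minimal sketch, assuming each past event has already been reduced by root cause analysis to a small numeric feature vector. The pattern names, the three features, and the alert threshold are all hypothetical, and a nearest-neighbour match is just one simple way to compare readings with reference patterns; real predictive models are far richer.

```python
import math

# Hypothetical reference patterns distilled from past root cause analyses.
# Each vector holds normalized (0..1) readings for three assumed features:
# (operator fatigue score, overdue maintenance ratio, process pressure deviation).
REFERENCE_PATTERNS = {
    "pump seal failure": (0.2, 0.9, 0.8),
    "fatigue-related slip": (0.9, 0.1, 0.2),
}


def closest_pattern(reading, patterns=REFERENCE_PATTERNS):
    """Return (name, distance) of the nearest historical pattern by Euclidean distance."""
    best_name, best_dist = None, math.inf
    for name, vector in patterns.items():
        dist = math.dist(reading, vector)
        if dist < best_dist:
            best_name, best_dist = name, dist
    return best_name, best_dist


def check_reading(reading, threshold=0.3):
    """Return an alert message if the reading is close to a known pattern, else None."""
    name, dist = closest_pattern(reading)
    if dist <= threshold:
        return f"ALERT: resembles '{name}' (distance {dist:.2f})"
    return None


print(check_reading((0.25, 0.85, 0.75)))  # ALERT: resembles 'pump seal failure' (distance 0.09)
print(check_reading((0.5, 0.5, 0.5)))     # None
```

The sketch only ever knows the patterns it is given: if the reference vectors come from weak investigations, the "nearest" match can still be the wrong one, which is exactly how unreliable historical data turns into false alerts.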

Asset-related data, including maintenance records and equipment operating KPIs, typically provides a better historical record than incident investigation reports and hazard identification records.

Good root cause analysis of past events is decisive: it allows reliable patterns to be established and improves the chances of reliable predictive analytics.

Conversely, without reliable data points to establish the reference patterns, the algorithm can be misled into spurious correlations and false alerts.

This is where expert knowledge becomes critical: domain expertise can be used to enhance the model’s ability to find patterns and to train the algorithm for better results.

In practice, wherever good safety practices such as incident reporting and investigation, hazard identification, and risk assessment are still being implemented, the model will need to rely more on expert assessment and less on cognitive computing.

2. How much ‘uncertainty’ is present in the current operational environment?

The uncertainty here could be related to the industry, technology, or culture.

Operating a new plant in a new cultural environment, or introducing a new process into an existing plant and culture, for example, can create uncertainty about how effective the risk controls will be in the changed environment.

In simple terms, fewer known reference patterns mean less reliable correlations.

A ‘predictable’ environment, such as one that has operated for many years at the same location and in the same culture, may require a less interpretive model than an environment where the effectiveness of the existing risk controls, whether process/administrative or technology/engineering, has not yet been put to the test.

In other words, the sophistication required of the algorithm may matter less the more predictable the environment is.

3. How mature is the organization in adopting new technologies and relying on a data-driven approach?

In the end, it all comes down to how the insights are transformed into actionable items and how these are acted on.

Organizations with well-structured governance and decision-making boards are far more likely to benefit fully from a strategic and interpretive model that provides actionable insights than organizations where these practices are not in place.

Take Formula 1 racing: drivers who discuss their car’s performance with their team, drawing on real-time analysis of data from the car’s many sensors, are more likely to finish in the top places than drivers who cannot rely on the same advice or choose not to.

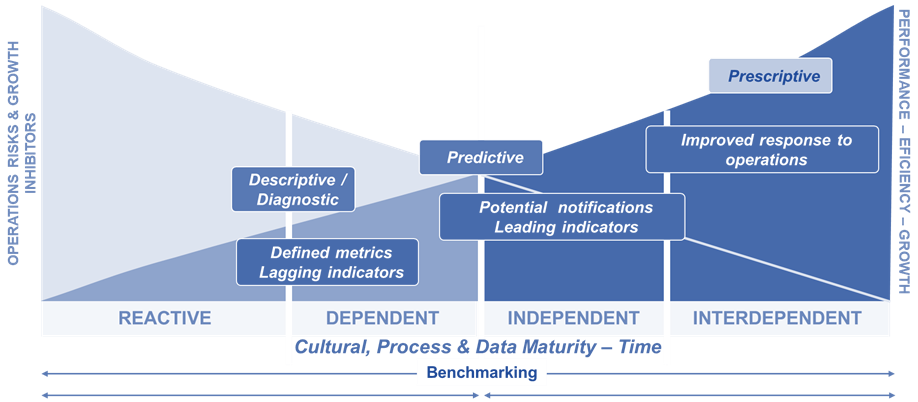

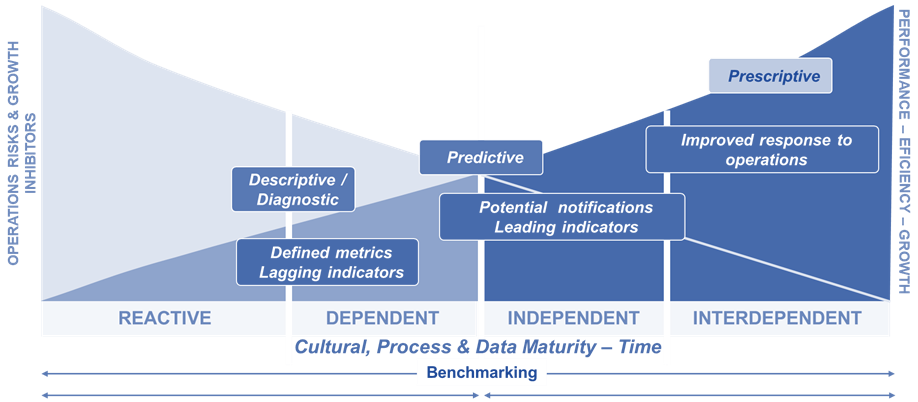

The model shown here is a good visual representation of the above. It combines the dss+™ Bradley Curve™ (which I discussed in my article “The biggest cultural transformation in history”) with the increasing levels of sophistication of data analytics, from descriptive to prescriptive.

As capabilities, behaviors, processes, and governance move from reactive (compliance) to interdependent (value for safety), the 3 Vs (Volume, Velocity, and Variety) and the quality of the safety data increase, and so does the quality of the insights from the analytics.

At the same time, just like riders on a tandem bike, as the quality of the identified actionable items increases, organizations are able to move faster and further, from merely ensuring their “right to operate” toward higher operational efficiency and maximized asset performance.

So, where to start?

1. Make the operational data meaningful

Start by reviewing the existing safety governance and performance monitoring process, and make sure meaningful data is being captured and analyzed in the right forums (a strong incident investigation process is a good starting point). Leadership dashboards are also important at this stage, although they will typically remain descriptive.

The goal is to instill a data-driven culture.

Typical questions that could be answered at this stage: “What happened?”, “How many events, how often, where?”, “What exactly is the problem?”
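At this descriptive stage, the analytics can be as simple as counting. The sketch below, assuming a hypothetical event log with `type`, `area`, and `month` fields (invented for illustration, not taken from any particular reporting system), answers “How many events, how often, where?” with Python’s standard `collections.Counter`.

```python
from collections import Counter

# Hypothetical event log; in practice this would come from the incident reporting system.
events = [
    {"type": "near miss", "area": "loading bay", "month": "Jan"},
    {"type": "incident", "area": "loading bay", "month": "Jan"},
    {"type": "near miss", "area": "workshop", "month": "Feb"},
    {"type": "near miss", "area": "loading bay", "month": "Feb"},
]


def summarize(events):
    """Answer the descriptive questions: how many, of what type, how often (per month), and where."""
    return {
        "total": len(events),
        "by_type": Counter(e["type"] for e in events),
        "by_month": Counter(e["month"] for e in events),
        "by_area": Counter(e["area"] for e in events),
    }


summary = summarize(events)
print(summary["by_area"].most_common(1))  # [('loading bay', 3)]
```

A leadership dashboard at this stage is essentially a visual layer over aggregations like these; the value lies in the data being complete and consistently categorized.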

2. Broaden the data spectrum – data engineering

Start building the next level of data capture sophistication, considering digital forms, sensors, IoT devices, wearables, etc. Prioritize the areas or processes where the previous analysis has indicated a higher rate of near misses or incidents. Ensure the new data is used in incident analysis to support root cause identification, and that the leadership dashboards are enhanced accordingly.

The goal is to uncover more events that can lead to a near miss or an incident, and to start building reference patterns while paving the way for a predictive process.

Typical questions that could be answered at this stage: “Why is this happening?”, “What if these trends continue?”, “What actions are needed?”
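“What if these trends continue?” can be explored first with a simple trend line. The sketch below fits an ordinary least-squares line to hypothetical monthly near-miss counts and extrapolates it forward; the function names and data are illustrative assumptions. It ignores seasonality, and a rising count may simply reflect that broader data capture is uncovering more events, so the projection is a prompt for discussion rather than a forecast.

```python
def linear_trend(counts):
    """Fit counts[t] ~ intercept + slope * t by ordinary least squares, with t = 0, 1, 2, ..."""
    n = len(counts)
    if n < 2:
        raise ValueError("need at least two periods to fit a trend")
    t_mean = (n - 1) / 2
    y_mean = sum(counts) / n
    sxy = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(counts))
    sxx = sum((t - t_mean) ** 2 for t in range(n))
    slope = sxy / sxx
    intercept = y_mean - slope * t_mean
    return intercept, slope


def project(counts, periods_ahead):
    """Extrapolate the fitted line a number of periods past the last observation."""
    intercept, slope = linear_trend(counts)
    return intercept + slope * (len(counts) - 1 + periods_ahead)


# Hypothetical monthly near-miss counts for one area.
monthly_near_misses = [4, 5, 7, 8]
print(round(project(monthly_near_misses, 1), 1))  # 9.5
```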

3. Work on data modeling and analysis – data science

With the support of data scientists and statistical tools, start looking at ways to model the data.

Expert knowledge is key here to validate the results obtained with the different techniques (typically regression and clustering, given the types of relationships involved) and to improve the pattern identification process.
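As an illustration of the clustering side, here is a minimal k-means sketch (Lloyd’s algorithm, one of many clustering techniques) that groups hypothetical incidents by two assumed features. The feature choice, data, and starting centroids are invented for this example, and real work would normally standardize features first, since hours and days sit on different scales. Whether a resulting group such as “late in the shift, long since maintenance” means anything is exactly the judgment the domain expert has to make.

```python
import math


def _assign(points, centroids):
    """Group points by their nearest centroid (Euclidean distance)."""
    clusters = [[] for _ in centroids]
    for p in points:
        nearest = min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
        clusters[nearest].append(p)
    return clusters


def kmeans(points, initial_centroids, max_iterations=20):
    """Minimal k-means (Lloyd's algorithm) from given starting centroids.

    Alternates between assigning points to the nearest centroid and moving each
    centroid to the mean of its points, until the centroids stop changing.
    """
    centroids = [tuple(c) for c in initial_centroids]
    for _ in range(max_iterations):
        clusters = _assign(points, centroids)
        # Empty clusters keep their previous centroid.
        updated = [
            tuple(sum(coord) / len(members) for coord in zip(*members)) if members else c
            for c, members in zip(centroids, clusters)
        ]
        if updated == centroids:
            break
        centroids = updated
    # Final assignment is always consistent with the returned centroids.
    return centroids, _assign(points, centroids)


# Hypothetical incidents described by (hours into shift, days since last maintenance).
incidents = [(1, 2), (2, 1), (1.5, 3), (10, 30), (11, 28), (9.5, 35)]
centroids, clusters = kmeans(incidents, initial_centroids=[(0, 0), (12, 40)])
for centre, members in zip(centroids, clusters):
    print([round(v, 1) for v in centre], len(members))
# prints [1.5, 2.0] 3, then [10.2, 31.0] 3
```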

The more inputs that reflect the specifics of the operational reality, the better, as culture, environment, and asset integrity are decisive factors in predicting undesirable safety events.

It’s a good moment to add operational/process data, as long as it provides references related to the operational environment.

4. Make sure the visualization enables insights and data-driven decisions

This could be the right moment to invest in an interactive visual interface system (do not forget the user experience here: this is not meant to be used by mathematicians).

The goal is to start predicting and continuously improving the process of learning from the data.

Typical questions that could be answered at this stage: “What will happen next?”, “When will it happen?”, “What should be done to stop the chain of events?”, “Which of our controls are vulnerable?”

A successful journey requires making sense of each step taken, celebrating the successes, and funding the next step with the benefits achieved in the previous one.

Enjoy the ride!

Vinicius Branchini

***

As a Global Innovation and Digital Manager with dss+, Vinicius Branchini is an experienced operations consultant, well versed in risk management and cultural transformation. Throughout his career, Vinicius has worked with multiple clients to protect their people and improve their operations. Given his experience in engaging people and his passion for innovation, Vinicius can advise on how to ensure that corporations and their people adopt new technologies in their work environment.